Choosing your AI stack

/ Arvid Andersson

Starting a new project or adding AI features to an existing product is an exciting endeavor. There are so many possibilities when it comes to deciding on providers, technologies, and implementation. One of the key parts is deciding on a strategy for your infrastructure. But how should you start thinking about this? In this blog post we will go through some of the key considerations to take into account when designing your AI infrastructure.

We will go through the following topics:

- What is the nature of your AI features?

- How important is inference speed?

- What about non-English support?

- What kind of observability is required?

- How are compliance and privacy handled in your AI stack?

I hope this blog post gives you inspiration and ideas for designing the stack for your AI-enabled projects. It is a new area of expertise that allows for a lot of exciting new questions and problem spaces, even for seasoned builders.

On-demand vs. background AI features

One key aspect of many AI interactions is that they require significant time to perform. Typically, more advanced models take longer and cost more, while smaller models are less expensive and deliver results more quickly. This might sound obvious, but the implications for user experience and system architecture are often overlooked. This oversight can lead to unexpected requirements surfacing during the implementation phase.

On-demand processing is essential for features that require immediate responses, such as real-time workflows like support chatbots or suggesting improvements to a text. In contrast, background processing is suitable for tasks that are less time-sensitive, such as data analysis or content moderation.

When designing an AI feature or application, it is crucial to consider early on which components should be processed on-demand versus in the background. This distinction will provide valuable insights into both user experience and the technical architecture requirements.

For background processes, you can utilize batch processing, which is often less costly at some providers. Delegating tasks to high-capacity AI models like GPT-4 or LLaMA-70B is also feasible. Although these models are slower and more expensive, they yield better results due to their superior ability to follow instructions and generate text. For on-demand scenarios, numerous options allow smaller models to return good results quickly, especially when provided with relevant context and carefully crafted prompts. The choice of models and providers is broader when it comes to on-demand AI processes.
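To make the split concrete, here is a minimal sketch of a router that answers on-demand tasks right away with a small model and queues everything else for a later batch run on a larger model. The `Task` and `Router` names and the two stub model functions are hypothetical stand-ins for illustration, not a real provider API.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, List, Optional


@dataclass
class Task:
    name: str
    prompt: str
    on_demand: bool  # True when a user is actively waiting for the answer


# Hypothetical stand-ins for real provider calls.
def small_fast_model(prompt: str) -> str:
    return f"[small] {prompt}"


def large_slow_model(prompt: str) -> str:
    return f"[large] {prompt}"


class Router:
    """Answers on-demand tasks immediately and defers the rest to a batch."""

    def __init__(self) -> None:
        self.background_queue: Deque[Task] = deque()

    def submit(self, task: Task) -> Optional[str]:
        if task.on_demand:
            # A user is waiting: favor latency over maximum quality.
            return small_fast_model(task.prompt)
        # Nobody is waiting: defer to the cheaper, slower batch path.
        self.background_queue.append(task)
        return None

    def run_batch(self) -> List[str]:
        """Drain the queue with the larger model, e.g. from a scheduled job."""
        results = []
        while self.background_queue:
            task = self.background_queue.popleft()
            results.append(large_slow_model(task.prompt))
        return results
```

In a real system the queue would likely be a persistent job queue and `run_batch` would call a provider's batch endpoint, but the decision point stays the same: is someone waiting for this result or not?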

Understanding and deciding between on-demand and background processing from the start can significantly influence your AI infrastructure decisions. By carefully selecting the appropriate models, processing strategies, and providers, you can optimize both the cost and performance of AI features.

How to think about inference speed?

Inference speed refers to the time it takes for an AI model to process input and generate output. This speed is important for AI applications that require real-time interaction with users, ensuring that the application remains responsive and efficient.

As mentioned previously, high latency easily creates a suboptimal user experience for on-demand features, leading to frustration and disengagement.

Use smaller models to reduce processing time, balancing the trade-off between speed and accuracy. One strategy can be to divide processes into multiple sub-processes that can more easily be completed with quality results by a smaller model and then use a larger model to create only the end result that the user is presented with.
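A minimal sketch of that tiered strategy could look like the following. It assumes the work can be split into independent chunks, and it takes the small and large models as plain callables so you can plug in any provider. The function name and prompt wording are made up for illustration.

```python
from typing import Callable, List

# Any function that takes a prompt and returns text (hypothetical interface).
ModelFn = Callable[[str], str]


def tiered_answer(chunks: List[str], small: ModelFn, large: ModelFn) -> str:
    """Run cheap sub-steps on a small model, then one final pass on a large one."""
    # Step 1: the small model handles each sub-task separately.
    partials = [small(f"Summarize: {chunk}") for chunk in chunks]

    # Step 2: the large model only sees the condensed notes and writes
    # the single result that the user is presented with.
    combined = "\n".join(partials)
    return large(f"Write the final answer from these notes:\n{combined}")
```

The large model is called exactly once per request regardless of how many chunks there are, which is where the savings in time and cost come from.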

If you have payloads that recur, it is generally a good idea to cache common query results to avoid spending time and money on repeated inference. This can greatly lower the time it takes to generate results and reduce costs.
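As an illustration, here is a small in-memory cache keyed on a hash of the model name and prompt. It is a sketch under a few assumptions: exact-match lookups are good enough, repeated prompts may return the same answer, and `model_fn` is a hypothetical callable wrapping your provider. In production you would probably back this with Redis or a database and add expiry.

```python
import hashlib
import json
from typing import Callable, Dict


class InferenceCache:
    """Exact-match cache in front of a model call."""

    def __init__(self, model_fn: Callable[[str, str], str]) -> None:
        self._model_fn = model_fn  # hypothetical: (model, prompt) -> output
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        # Hash model and prompt together so different models never collide.
        payload = json.dumps({"model": model, "prompt": prompt}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def complete(self, model: str, prompt: str) -> str:
        key = self._key(model, prompt)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        # Cache miss: pay for inference once and remember the result.
        self.misses += 1
        result = self._model_fn(model, prompt)
        self._store[key] = result
        return result
```

Tracking `hits` and `misses` gives you a quick read on whether the cache is worth keeping for a given feature.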

When choosing model runtime providers for your AI infrastructure, make sure you have a good idea of what kind of inference times you can expect from the alternatives you are considering, and match this with the needs of your application from the start. And remember, in this fast-moving world of AI tech, it's very likely that performance will increase in the near term, especially for the models that we view as top of the line right now.
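One way to get that idea is to time a representative call a number of times and look at the median and the tail. The helper below is a rough sketch. The function name and the choice of statistics are my own assumptions, and `call` is any zero-argument function that performs one request against the provider you are evaluating.

```python
import math
import statistics
import time
from typing import Callable, Dict, List


def measure_latency(call: Callable[[], object], runs: int = 20) -> Dict[str, float]:
    """Time `call` repeatedly and return median, p95 and max in seconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")

    samples: List[float] = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append(time.perf_counter() - start)

    samples.sort()
    # Nearest-rank 95th percentile on the sorted samples.
    p95_index = max(0, math.ceil(0.95 * len(samples)) - 1)
    return {
        "median_s": statistics.median(samples),
        "p95_s": samples[p95_index],
        "max_s": samples[-1],
    }
```

The tail numbers tend to matter more than the average for on-demand features, since those are the requests your users will remember.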

A bet many builders make is that costs will be significantly reduced and inference speeds will increase in the near future, which justifies starting out on a more costly infrastructure.

Multilingual support in LLMs

Well-known large AI models like GPT-4 or Claude Opus generally offer good support for many non-English languages, outperforming open-source or lesser-known models that often yield subpar results in languages other than English. Historically, many models have focused on a limited subset of languages.

When developing applications that require multilingual capabilities, it is crucial to select AI models that work with the languages you need to support.

It's generally a good idea to thoroughly test potential models to evaluate how well they handle different languages. Benchmark models not only for quality but also for how they handle tone and syntax variations across languages. Create benchmarks that can be run repeatedly, so that you can re-evaluate new models as they come along.
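A repeatable benchmark does not have to be fancy to be useful. The sketch below runs a fixed set of cases through any model callable and reports a pass rate per language. The `Case` structure and the substring check are simplifying assumptions: checking tone and syntax properly usually needs human review or a model-graded evaluation on top.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Case:
    language: str      # e.g. "sv", "de"
    prompt: str
    must_contain: str  # crude proxy for a correct answer


def run_benchmark(model: Callable[[str], str], cases: List[Case]) -> Dict[str, float]:
    """Return the share of passed cases per language for one model."""
    passed: Dict[str, int] = {}
    total: Dict[str, int] = {}
    for case in cases:
        total[case.language] = total.get(case.language, 0) + 1
        output = model(case.prompt)
        # Case-insensitive substring match keeps the check deterministic.
        if case.must_contain.lower() in output.lower():
            passed[case.language] = passed.get(case.language, 0) + 1
    return {lang: passed.get(lang, 0) / count for lang, count in total.items()}
```

Keeping the cases in version control lets you rerun the exact same suite whenever a new model or provider shows up.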

Opt for models that have demonstrated robust multilingual support if you need that. Models like OpenAI's GPT-4 or Anthropic's Claude Opus are generally preferable as they are trained on diverse datasets that include multiple languages.

As mentioned above, one strategy for handling cost and speed is a tiered approach. Use smaller models to handle the bulk of the processing work, especially for tasks that are less language-sensitive or where perfect language accuracy is not critical. Then use larger models for creating the results that directly interact with users. This strategy ensures that the final user-facing content benefits from the high language capabilities of state-of-the-art models.

Do you need an observability and evaluation platform?

When selecting your AI stack, an important consideration is the integration of an observability and evaluation tool. These tools are used for monitoring and understanding the performance and quality of your AI application over time. Observability in AI involves tracking key metrics such as cost, inference time, and user feedback. Additionally, it usually involves building up a dataset of inputs and results for evaluation, which is essential for refining and optimizing the AI calls based on actual production data.

Many observability tools also come with features to collaborate on and evaluate prompts, as well as create datasets for fine-tuning models. This collaborative aspect enhances the development process by allowing team members to share insights and refine strategies collectively.

As AI providers usually come with a variable cost based on usage, having good insight into performance and usage data becomes key in any production app that is aiming to scale up. With it, you can make sure that you deliver consistent results and avoid being surprised by upcoming bills.

Adding an observability platform usually involves adding middleware to your AI integrations. This middleware logs API calls and their results for subsequent processing and inspection. An advantage of adding this middleware is that it acts as a provider-agnostic layer between your application and the end APIs. This can simplify switching or evaluating providers in the future.
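To show the shape of such a middleware, here is a minimal sketch of a wrapper that records latency and an estimated cost for every call. All names are hypothetical, and the per-character pricing is a placeholder assumption: real providers typically bill per token, and real observability platforms ship their own SDKs.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class CallRecord:
    provider: str
    model: str
    prompt: str
    output: str
    latency_s: float
    estimated_cost_usd: float


@dataclass
class ObservedClient:
    """Provider-agnostic wrapper that logs every model call."""

    provider: str
    model: str
    call_fn: Callable[[str], str]  # hypothetical: wraps the real provider call
    usd_per_1k_chars: float        # placeholder pricing unit, not a real rate
    log: List[CallRecord] = field(default_factory=list)

    def complete(self, prompt: str) -> str:
        start = time.perf_counter()
        output = self.call_fn(prompt)
        latency = time.perf_counter() - start

        # Rough cost estimate based on input plus output size.
        cost = (len(prompt) + len(output)) / 1000 * self.usd_per_1k_chars
        self.log.append(
            CallRecord(self.provider, self.model, prompt, output, latency, cost)
        )
        return output

    def total_cost(self) -> float:
        return sum(record.estimated_cost_usd for record in self.log)
```

Because the application only talks to `ObservedClient.complete`, swapping `call_fn` for another provider leaves the rest of the code untouched, and the accumulated `log` doubles as a dataset of real inputs and outputs for later evaluation.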

However, incorporating an additional layer through which user data passes necessitates careful consideration of privacy policies and end-user terms to ensure compatibility. One approach to mitigate privacy concerns is to opt for a self-hosted, open-source observability platform. This setup ensures that user data remains within your control and is not exposed to external parties. By adopting such a platform, you maintain greater control over data privacy and security, which is increasingly important in today's data-sensitive environment.

Proprietary and open-source AI models

Organizations often start with well-known proprietary models like those offered by OpenAI, which are widely used for their reliability and broad recognition, and many developers have started with these models to explore and learn due to their fame and ease of use. However, the open-source route is gaining traction among builders who seek flexibility and cost-effectiveness, and who see the value of open source in itself. Today, numerous competent open-source models are available that offer performance comparable to proprietary models like GPT-3.5 and above, but often at a lower cost.

There are two main paths for running open-source models: self-hosting or using a third-party provider. Self-hosting involves a higher upfront investment in resources and infrastructure but grants complete control over the data and model customization. However, the easiest way to get started is to try one of the hosted third-party providers that take care of all the complexities of running AI model inference in production.

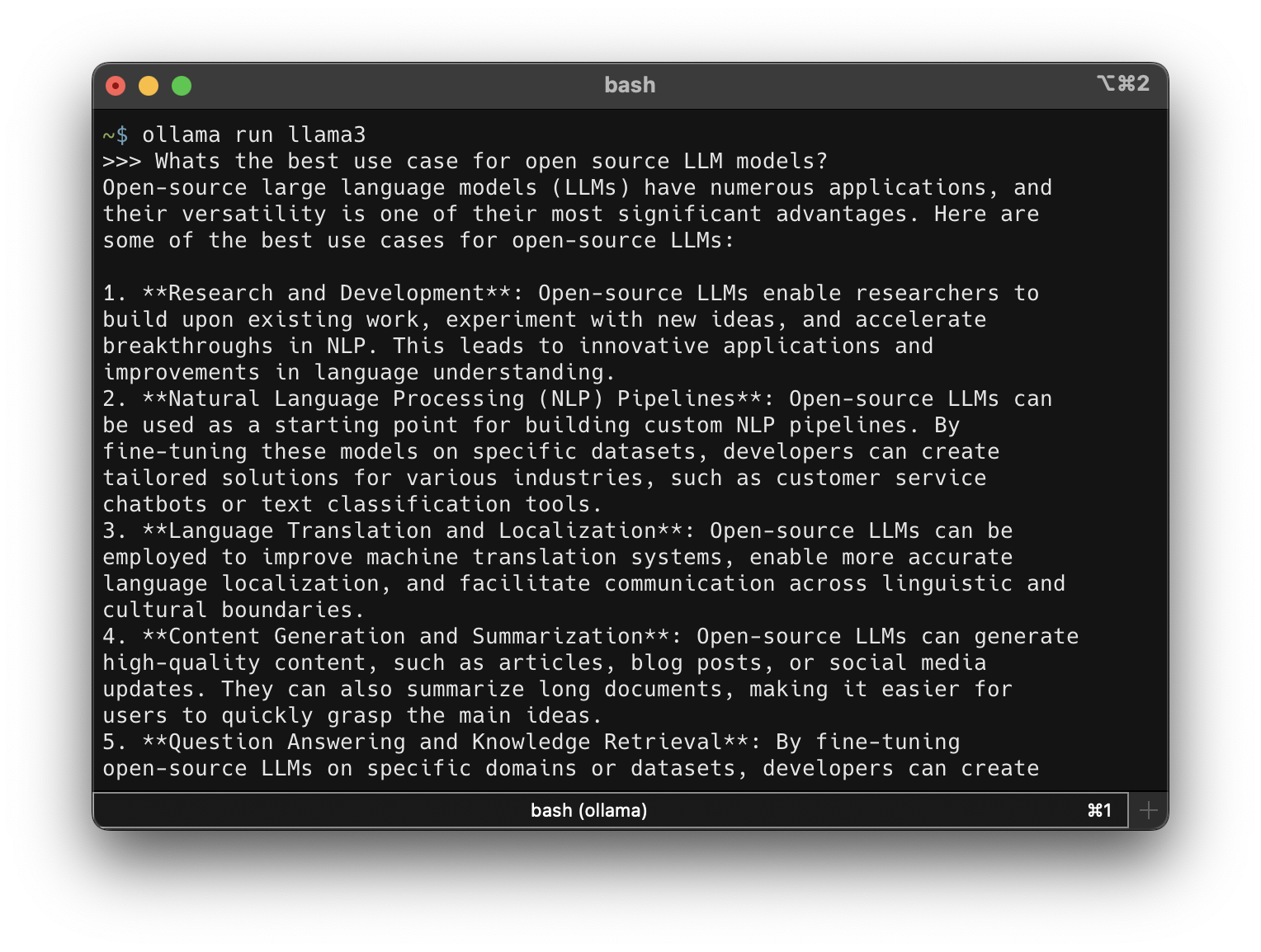

Running llama3 8b locally with Ollama.

Tools like Ollama are very useful for benchmarking these open-source models. Ollama provides a range of models to run locally on your laptop and allows you to get a practical sense of their performance and characteristics.

Additionally, many inference providers for open-source AI models, as well as Ollama, support the same API format as OpenAI. This simplifies testing different models and makes it easier to integrate them into existing systems without extensive modifications.
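In practice, a shared API format means that switching backend can come down to changing a base URL and a model name. The sketch below builds (but does not send) a chat completion request using only the standard library. The helper name is my own, the base URLs assume Ollama's default local port and OpenAI's public endpoint, and the model names and API key are placeholders.

```python
import json
import urllib.request


def build_chat_request(
    base_url: str, model: str, user_message: str, api_key: str = "unused"
) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request for any compatible backend."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Same code path, different backends: only base URL and model name change.
local = build_chat_request("http://localhost:11434/v1", "llama3", "Hello")
hosted = build_chat_request(
    "https://api.openai.com/v1", "gpt-4", "Hello", api_key="YOUR_KEY"
)
```

Passing either request to `urllib.request.urlopen` would send it, provided the local server is running or the key is valid. Keeping the base URL and model name in configuration makes it cheap to benchmark a new provider against your existing setup.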

In essence, the decision between proprietary and open-source AI models should be guided by your specific needs and constraints. Benchmark model options against your requirements to determine the best fit.

Compliance and privacy in AI products

Users and organizations are increasingly aware of and concerned about data privacy, and they will likely have questions regarding the handling of their information as you scale up. As proprietary models and APIs gain access to user data, it is crucial to understand and transparently communicate how this data will be used. Compliance and privacy are significant trust factors in the AI space that are not to be underestimated. A common question is whether user data will go into the training dataset for upcoming models.

Going for open-source solutions and self-hosting is an attractive route that allows for complete control over user data and can also serve as a differentiating factor. It poses a lot of interesting challenges for anyone who has the resources, and offers significant long-term benefits in terms of data privacy and compliance flexibility.

Reviewing the data handling information provided by AI providers and aligning it with product needs might not be the most exciting part of setting up your AI infrastructure strategy, but doing it and having a good overview of the problem space allows for easier decision making and a clearer set of requirements going forward.

Let’s start building

Exploring and optimizing your AI infrastructure stack is important in establishing a solid and predictable foundation for any product. Regularly evaluating and benchmarking your AI stack's components allows you to make informed decisions that keep pace with the rapidly evolving AI landscape.

When considering your stack options, always aim for the best user experience. Look to maximize user value within the constraints of your scope. Remember, choosing the right components for your AI infrastructure can significantly enhance your product's performance and scalability.

Finally, embrace the journey! Working with AI and shaping the future of problem solving is an exhilarating experience. This is a special time in the history of tech, full of opportunities for innovation and growth. Dive in, experiment, learn, and have fun 🔥
